With the rapid advancement of artificial intelligence, intelligent unmanned systems in areas such as autonomous driving and embodied intelligence are increasingly being deployed in real-world scenarios, driving a new wave of technological revolution and industrial transformation. As a primary means of acquiring information, visual perception plays a crucial role in these intelligent systems. However, achieving efficient, precise, and robust visual perception in dynamic, diverse, and unpredictable environments remains an open challenge.

In open-world scenarios, intelligent systems must not only process vast amounts of data but also handle extreme events. In driving, these include sudden hazards, abrupt lighting changes at tunnel entrances, and strong flash interference at night. Traditional visual sensing chips are constrained by the "power wall" and the "bandwidth wall": raising speed, precision, and dynamic range drives up power consumption and data volume. As a result, these chips often suffer from distortion, failure, or high latency in such scenarios, severely compromising system stability and safety.

To address these challenges, the Center for Brain Inspired Computing Research (CBICR) at Tsinghua University has focused on brain-inspired vision sensing and proposed a complementary sensing paradigm consisting of a primitive-based representation and two complementary visual pathways. Drawing on the fundamental principles of the human visual system, the approach decomposes visual information into primitive-based representations and then combines these primitives, mimicking features of human vision, to form two complementary, information-complete perception pathways.

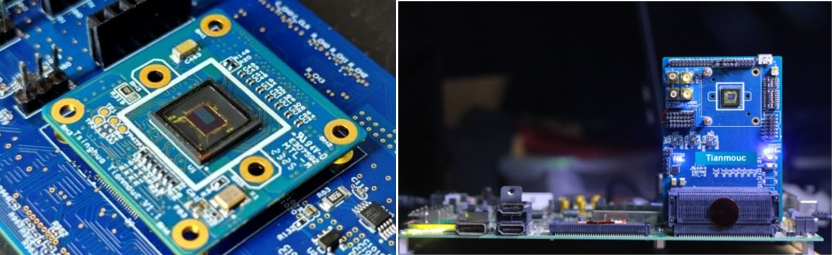

Based on this new paradigm, CBICR has developed the world's first brain-inspired complementary vision chip, "Tianmouc". The chip acquires visual information at high speed (10,000 frames per second) with 10-bit precision and a high dynamic range of 130 dB, while reducing bandwidth by 90% and maintaining low power consumption. It not only overcomes the performance bottlenecks of traditional visual sensing paradigms but also handles a variety of extreme scenarios efficiently, ensuring system stability and safety.
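For readers less familiar with sensor specifications, the headline figures can be unpacked with standard conversions. This is a sketch that assumes the common image-sensor convention of expressing dynamic range as 20·log10 of the ratio between the largest and smallest resolvable signal; the article does not state which convention was used, and the symbols $t_{\text{frame}}$, $N_{\text{levels}}$, $S_{\max}$, and $S_{\min}$ are introduced here only for illustration. The 90% bandwidth figure is left out because its baseline is not given.

$$
\begin{aligned}
t_{\text{frame}} &= \frac{1}{10\,000\ \text{frames/s}} = 100\ \mu\text{s} \\
N_{\text{levels}} &= 2^{10} = 1024 \\
\text{DR} &= 20\log_{10}\frac{S_{\max}}{S_{\min}} = 130\ \text{dB}
\;\Rightarrow\; \frac{S_{\max}}{S_{\min}} = 10^{130/20} = 10^{6.5} \approx 3.16 \times 10^{6}
\end{aligned}
$$

Under this convention, the brightest signal the sensor can capture is roughly three million times the dimmest, a range relevant to scenes such as tunnel entrances where brightness changes abruptly.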

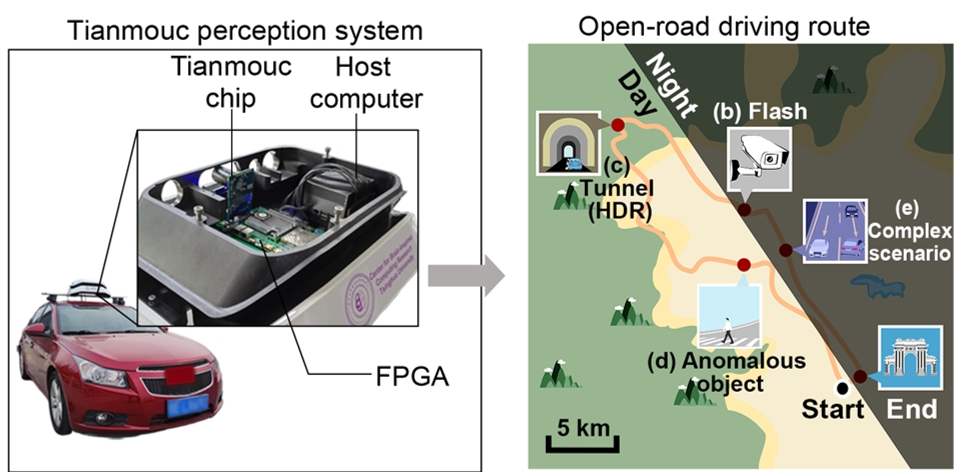

Building on the Tianmouc chip, the team developed high-performance software and algorithms and validated them on a vehicle-mounted perception platform operating in open environments. Across a range of extreme scenarios, the system achieved low-latency, high-performance real-time perception, demonstrating strong potential for applications in intelligent unmanned systems.

The successful development of Tianmouc is a significant breakthrough in the field of visual sensing chips. It provides strong technological support for the ongoing intelligent revolution and opens new avenues for key applications such as autonomous driving and embodied intelligence. Together with CBICR's established work on brain-inspired computing chips such as "Tianjic", toolchains, and brain-inspired robotics, Tianmouc further strengthens the brain-inspired intelligence ecosystem and helps drive progress toward artificial general intelligence.

The research paper describing these results, "A Vision Chip with Complementary Pathways for Open-world Sensing," was featured as the cover article of the May 30, 2024 issue of Nature. This is the team's second Nature cover, following their earlier work on the hybrid-paradigm brain-inspired computing chip "Tianjic." Together, the two works mark foundational breakthroughs in both brain-inspired computing and brain-inspired sensing.

The cover image of the May 30, 2024 issue of Nature

A Tianmouc chip (left) and a perception system (right)

The demo platform for autonomous driving perception

The corresponding authors are Professors Luping Shi and Rong Zhao of the Department of Precision Instruments, Tsinghua University. The co-first authors are Dr. Zheyu Yang (Ph.D. graduate of the Department of Precision Instruments, Tsinghua University, now Research and Development Manager at Beijing Lynxi Technology Co., Ltd.), Taoyi Wang, and Yihan Lin (both Ph.D. candidates in the Department of Precision Instruments, Tsinghua University). Tsinghua University is the first affiliation, and collaborating institutions include Beijing Lynxi Technology Co., Ltd.

This research was supported by the STI 2030-Major Projects, the National Natural Science Foundation of China, and the IDG/McGovern Institute for Brain Research at Tsinghua University.

Paper link: https://www.nature.com/articles/s41586-024-07358-4

Editors: Li Han, Guo Lili