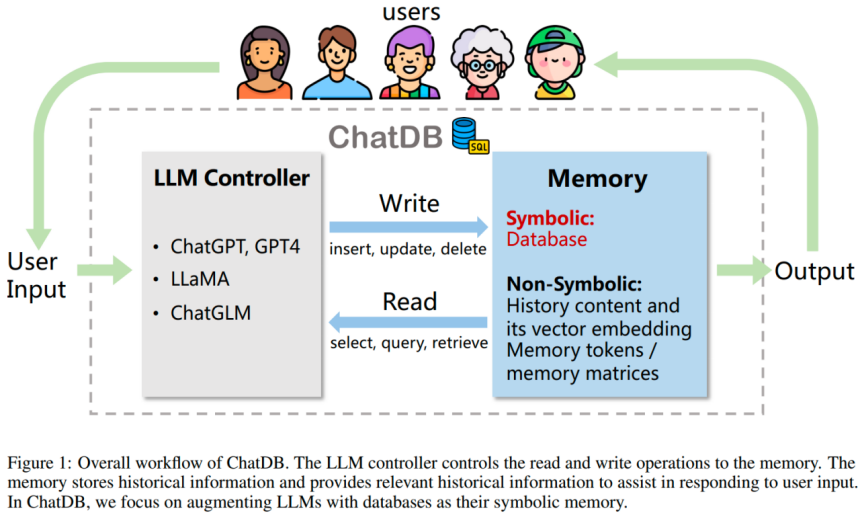

While large language models (LLMs) have become increasingly capable at language understanding and knowledge reasoning, handling long contexts remains a challenge. Prof. Hang Zhao’s research group recently proposed ChatDB, a novel framework that augments LLMs with symbolic memory to improve their memory and complex reasoning capabilities. In the group’s experiments, ChatDB surpasses ChatGPT on complex multi-hop reasoning.

This research makes several contributions to the field of LLMs. First, it augments LLMs with databases as external symbolic memory, which allows historical data to be stored in a structured form and manipulated through symbolic, complex operations expressed as SQL statements. Second, the proposed “chain-of-memory” approach converts user input into multi-step intermediate memory operations, which enables ChatDB to handle complex, multi-table database interactions with higher accuracy and stability. Finally, the experiments demonstrate that augmenting LLMs with symbolic memory improves multi-hop reasoning and prevents error accumulation, enabling ChatDB to outperform ChatGPT on a synthetic dataset.
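To make the idea of a database as symbolic memory concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name, columns, and records are hypothetical and are not taken from the paper; the point is only that a fact stored as a row can be retrieved and aggregated exactly by SQL rather than recalled from a long text context.

```python
import sqlite3

# In-memory database standing in for the external symbolic memory.
# The schema and rows below are illustrative assumptions, not the paper's data.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales ("
    "id INTEGER PRIMARY KEY, fruit TEXT, quantity_kg REAL, unit_price REAL)"
)

# Historical records are stored as structured rows instead of free text.
records = [("apple", 3.0, 2.5), ("pear", 2.0, 4.0), ("apple", 1.0, 2.5)]
conn.executemany(
    "INSERT INTO sales (fruit, quantity_kg, unit_price) VALUES (?, ?, ?)",
    records,
)

# The calculation is carried out by the database, not by the language model.
apple_revenue = conn.execute(
    "SELECT SUM(quantity_kg * unit_price) FROM sales WHERE fruit = ?",
    ("apple",),
).fetchone()[0]
print(apple_revenue)
```

Because the arithmetic happens inside the SQL engine, the result does not depend on the model reproducing numbers correctly from its context window.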

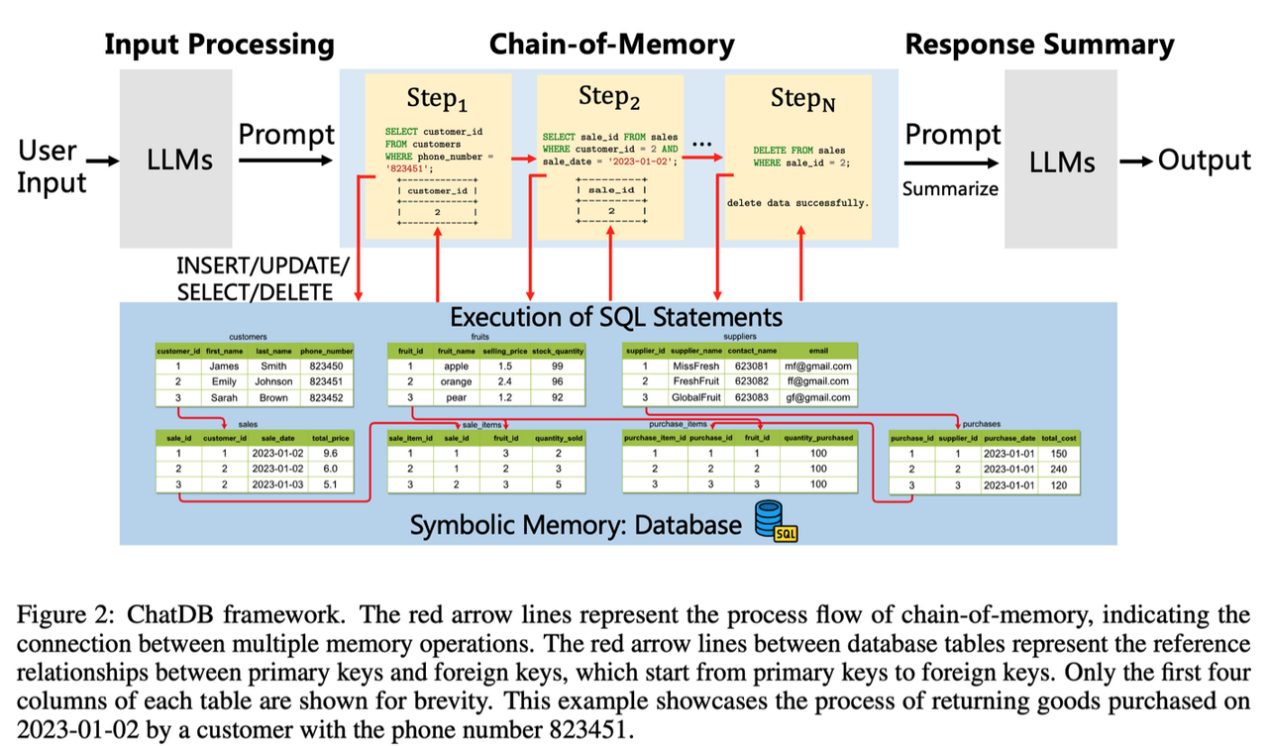

The ChatDB framework consists of three main stages: input processing, chain-of-memory, and response summary. In the input processing stage, ChatDB uses an LLM to generate, from the user’s input, a series of intermediate steps for manipulating the symbolic memory. In the chain-of-memory stage, ChatDB executes the generated SQL statements in sequence against the symbolic memory, using operations such as insert, update, select, and delete. In the response summary stage, ChatDB composes the final response to the user from the results of the chain-of-memory steps.
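The three stages described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' implementation: `plan_steps` is a hypothetical hard-coded stand-in for the LLM that would generate the SQL steps, `summarize` is a stand-in for the LLM-written response, and the schema is invented for the example. What the sketch does show is the sequential execution, where a later step can depend on the result of an earlier one.

```python
import sqlite3

def build_memory():
    """Create a tiny symbolic memory (hypothetical schema and data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE fruits (name TEXT PRIMARY KEY, price REAL, stock_kg REAL)"
    )
    conn.execute("CREATE TABLE sales (fruit TEXT, quantity_kg REAL, total REAL)")
    conn.execute("INSERT INTO fruits VALUES ('apple', 2.5, 10.0)")
    return conn

def plan_steps(fruit, quantity_kg):
    """Stage 1 stand-in: an LLM would generate these steps from user input.

    Each step maps the results of earlier steps to (sql, params).
    """
    return [
        lambda results: ("SELECT price FROM fruits WHERE name = ?", (fruit,)),
        # Uses the price returned by step 0 to compute the sale total.
        lambda results: (
            "INSERT INTO sales VALUES (?, ?, ?)",
            (fruit, quantity_kg, results[0][0][0] * quantity_kg),
        ),
        lambda results: (
            "UPDATE fruits SET stock_kg = stock_kg - ? WHERE name = ?",
            (quantity_kg, fruit),
        ),
        lambda results: ("SELECT stock_kg FROM fruits WHERE name = ?", (fruit,)),
    ]

def run_chain(conn, steps):
    """Stage 2: execute the steps in order, collecting each step's rows."""
    results = []
    for step in steps:
        sql, params = step(results)
        results.append(conn.execute(sql, params).fetchall())
    conn.commit()
    return results

def summarize(fruit, quantity_kg, results):
    """Stage 3 stand-in: turn the step results into a reply for the user."""
    total = results[0][0][0] * quantity_kg
    stock = results[3][0][0]
    return f"Sold {quantity_kg} kg of {fruit} for {total:.2f}; {stock} kg left in stock."

conn = build_memory()
results = run_chain(conn, plan_steps("apple", 2.0))
summary = summarize("apple", 2.0, results)
print(summary)
```

Splitting the request into small SQL steps means each intermediate value is read back from the database rather than carried forward in generated text, which is the intuition behind the claim that this design limits error accumulation.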

In the paper, the researchers evaluate ChatDB on a synthetic dataset of fruit shop management records. The results show that ChatDB outperforms ChatGPT on questions requiring multi-hop reasoning and precise calculations, which underscores the advantage of using a database as symbolic memory.

The corresponding authors of the paper are IIIS Assistant Professor Hang Zhao and Beijing Academy of Artificial Intelligence researcher Jie Fu. The co-first authors are Jie Fu and IIIS PhD student Chenxu Hu. The other authors are IIIS PhD students Chenzhuang Du and Junbo Zhao. The paper has been posted on arXiv and shared on Twitter by well-known machine learning researchers such as Elvis Saravia.

Paper link: https://arxiv.org/abs/2306.03901

Project page: https://chatdatabase.github.io

Source code: https://github.com/huchenxucs/ChatDB

Editor: Li Han