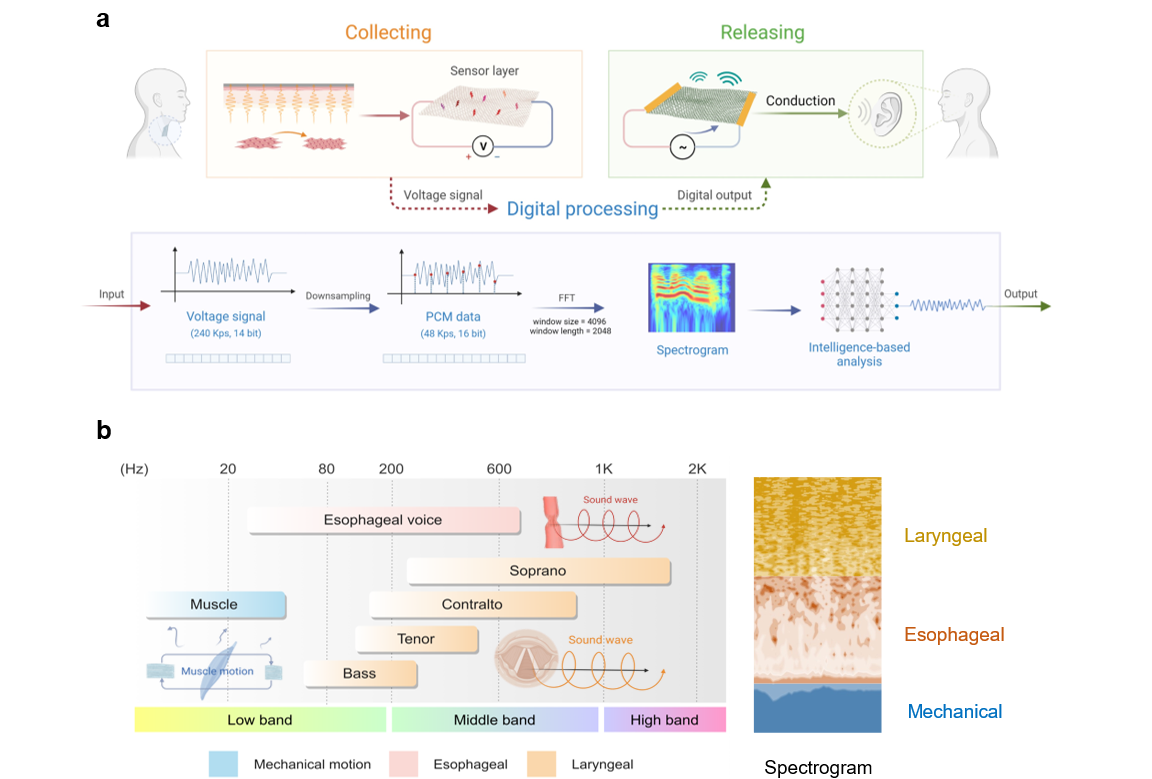

A team of researchers from Tsinghua University’s School of Integrated Circuits, led by Professor Ren Tian-ling, has made significant progress in intelligent speech interaction. They have developed a wearable artificial throat that senses the multimodal mechanical signals associated with laryngeal vocalization for speech recognition, and that can also generate sound through the thermoacoustic effect for speech interaction. The results offer a new technical approach for speech recognition and interaction systems.

Speech interaction paradigm based on the intelligent wearable artificial throat

Speech is an important means of human communication, but both the speaker's health (for example, voice disorders caused by neurological disease, cancer or trauma) and the surrounding environment (noise interference, transmission media) often impair how sound is transmitted and recognized. Researchers have continued to improve speech recognition and interaction technologies to cope with weak sound sources and noisy environments. Multi-channel acoustic sensors can significantly improve recognition accuracy, but at the cost of larger devices. Wearable devices, by contrast, can acquire high-quality raw speech and other physiological signals. However, there has been insufficient evidence that the laryngeal muscle movements and vocal-organ vibrations reflected on the body surface carry recognizable speech features, and no experiment has yet demonstrated their viability as a complete speech recognition technology.

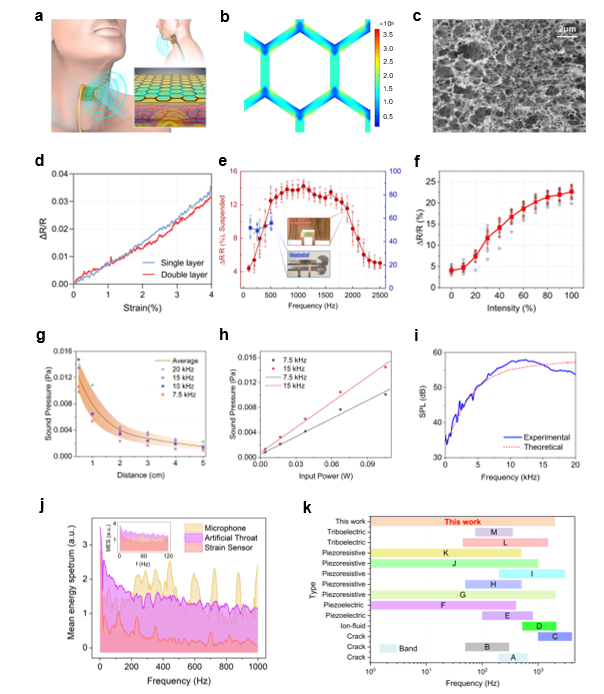

Design and characterization of the artificial throat device

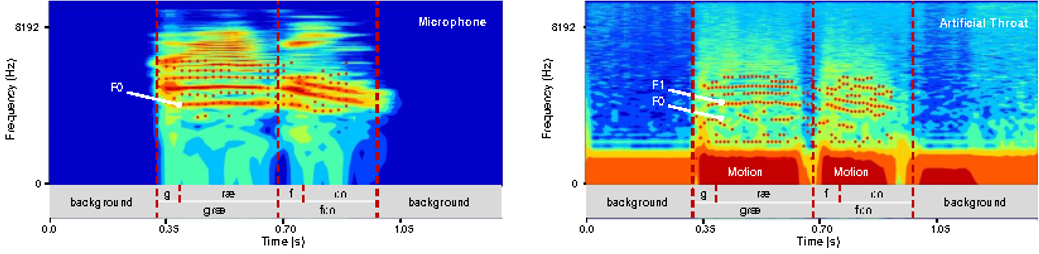

Speech annotation and formant feature analysis of signals collected by the artificial throat device and a commercial microphone.

To address this issue, Professor Ren Tian-ling's team developed a graphene-based intelligent wearable artificial throat (AT). Compared with commercial microphones and piezoelectric films, the artificial throat is highly sensitive to low-frequency muscle movement, medium-frequency esophageal vibration and high-frequency sound waves (Fig. 1 and Fig. 2), and it can also perceive speech robustly in the presence of noise (Fig. 2). By sensing both acoustic signals and mechanical motion, the artificial throat can capture a lower speech fundamental frequency (F0) signal (Fig. 3). The device can also generate sound through the thermoacoustic effect. Its simple fabrication process, stable performance and ease of integration make the artificial throat a new hardware platform for speech recognition and interaction.
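
The fundamental frequency (F0) mentioned above is the rate at which the vocal folds vibrate during voiced speech. As a rough illustration of what extracting F0 from a sensor signal involves, the sketch below estimates F0 for a single frame with the classic autocorrelation method. This is a generic textbook technique chosen for illustration, not the signal-processing pipeline used in the paper; the function name `estimate_f0` and the default 50 to 400 Hz search range are assumptions.

```python
import math


def estimate_f0(samples, sample_rate, f_min=50.0, f_max=400.0):
    """Estimate the fundamental frequency (Hz) of one voiced frame.

    Generic autocorrelation method for illustration only; the default
    50-400 Hz search range is an assumption, not a value from the paper.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset

    # Candidate periods (in samples), from the shortest (f_max) to the longest (f_min).
    lag_min = max(1, int(sample_rate / f_max))
    lag_max = min(int(sample_rate / f_min), n - 1)
    if lag_min > lag_max:
        raise ValueError("frame too short for the requested F0 range")

    best_lag, best_r = lag_min, -math.inf
    for lag in range(lag_min, lag_max + 1):
        # Unnormalized autocorrelation: longer lags sum fewer terms,
        # which biases against picking a multiple of the true period.
        r = sum(x[i] * x[i + lag] for i in range(n - lag))
        if r > best_r:
            best_lag, best_r = lag, r
    return sample_rate / best_lag


if __name__ == "__main__":
    rate = 8000
    tone = [math.sin(2 * math.pi * 200 * i / rate) for i in range(800)]
    print(estimate_f0(tone, rate))  # 200.0 for this synthetic tone
```

For a clean 200 Hz test tone sampled at 8 kHz, the strongest autocorrelation peak falls at a lag of 40 samples, giving 200 Hz. The unnormalized sum is used deliberately: because it shrinks slightly at longer lags, it helps avoid octave errors where a multiple of the true period is chosen. Real throat-sensor signals would need framing, voicing detection and filtering on top of this.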

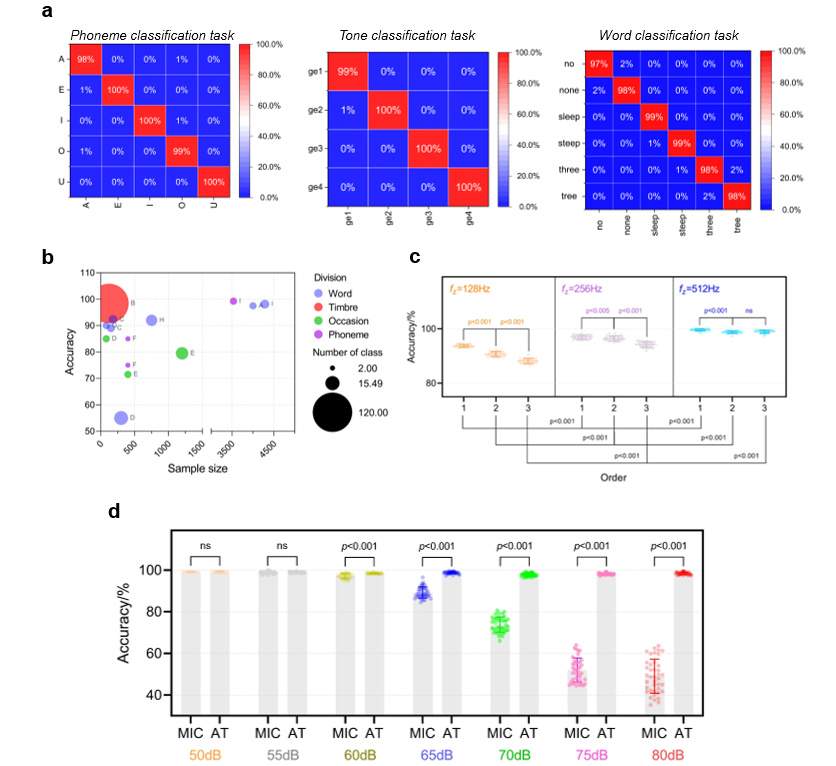

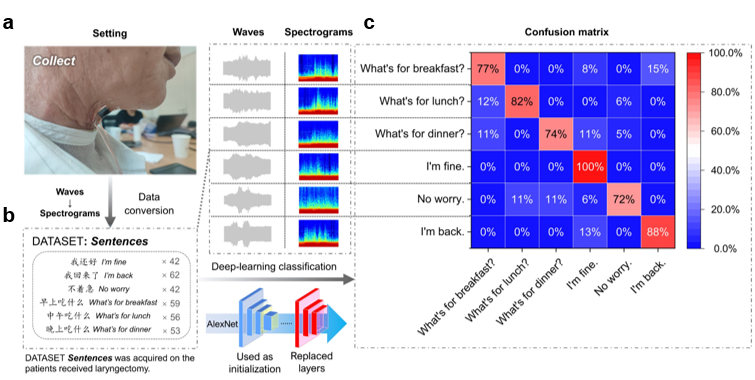

Speech recognition performance of the artificial throat

The research team also applied artificial intelligence models to perform speech recognition and synthesis on the signals sensed by the artificial throat, achieving high-precision recognition of basic speech elements (phonemes, tones and words) as well as recognition and reproduction of the indistinct speech of a laryngectomy rehabilitation participant, offering an innovative communication and interaction solution for people with voice impairments. Experimental results show that the mixed-modality speech signals collected by the artificial throat allow basic speech elements (phonemes, tones and words) to be recognized with an average accuracy of 99.05%. The artificial throat's noise resistance is also significantly better than that of a microphone: it maintains its recognition ability in ambient noise above 60 dB. Professor Ren Tian-ling's team further demonstrated a voice interaction application. With an integrated AI model, the artificial throat recognized everyday words spoken indistinctly by a laryngectomy patient with more than 90% accuracy. The recognized content is then synthesized into speech and played back through the artificial throat, which begins to restore the participant's ability to communicate by speech.
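
To put the 60 dB figure in physical terms: assuming it refers to sound pressure level (SPL) relative to the standard 20 µPa reference in air (the article does not state the reference or any frequency weighting), it corresponds to an RMS sound pressure of about 0.02 Pa, roughly the level commonly quoted for normal conversation:

$$
L_p = 20\log_{10}\frac{p_{\mathrm{rms}}}{p_0},\qquad p_0 = 20\,\mu\mathrm{Pa}
\quad\Longrightarrow\quad
p_{\mathrm{rms}} = p_0\cdot 10^{L_p/20} = 20\,\mu\mathrm{Pa}\times 10^{60/20} = 0.02\,\mathrm{Pa}.
$$

Because the scale is logarithmic, each additional 20 dB corresponds to ten times the sound pressure, so noise "above 60 dB" can be considerably stronger than this baseline.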

A laryngectomy rehabilitation participant uses the wearable artificial throat for speech interaction.

According to the research team, the artificial throat offers an innovative speech recognition and interaction solution for people with voice impairments, as well as a new hardware platform for next-generation speech recognition and interaction systems. There is still considerable room to optimize and extend the device, for example by improving voice quality and volume, increasing the variety and expressiveness of the voice, and combining other physiological signals and environmental information to achieve more natural and intelligent voice interaction. The team hopes that, through further research and collaboration, the artificial throat will benefit more people with voice disabilities and broaden the applications of voice interaction.

Professor Ren Tian-ling's team at the School of Integrated Circuits of Tsinghua University has long been committed to fundamental research on, and practical applications of, two-dimensional material devices, achieving breakthroughs at the levels of materials, device structures, fabrication and system integration, with a focus on new micro- and nanoelectronic devices that overcome the limitations of traditional devices. The team has made many innovations in new graphene acoustic devices and various sensor components, and has published numerous papers in leading journals such as Nature, Nature Electronics and Nature Communications, as well as at top international conferences in the field such as the IEEE International Electron Devices Meeting (IEDM).

The article, titled "Mixed-modality speech recognition and interaction using a wearable artificial throat", was published online in Nature Machine Intelligence at 00:00 on February 24, 2023. The corresponding authors are Professor Ren Tian-ling, Associate Professor Tian He and Associate Professor Yang Yi of the School of Integrated Circuits, Tsinghua University, and Professor Luo Qingquan of the School of Medicine, Shanghai Jiao Tong University. Yang Qisheng, a doctoral student at the School of Integrated Circuits, Tsinghua University, and Jin Weiqiu, a doctoral student at the School of Medicine, Shanghai Jiao Tong University, are co-first authors. The project was supported by the National Natural Science Foundation of China, the Ministry of Science and Technology, the Fok Ying-Tong Education Foundation of the Ministry of Education, the Beijing Natural Science Foundation, the Guoqiang Research Institute of Tsinghua University, the Foshan Advanced Manufacturing Research Institute of Tsinghua University, the Tsinghua University-Toyota Joint Research Institute, and the Tsinghua-Huafa Joint Research Institute of Architectural Optoelectronics Technology.

Full text link: https://www.nature.com/articles/s42256-023-00616-6

Editor: Li Han